By Allan Roberts

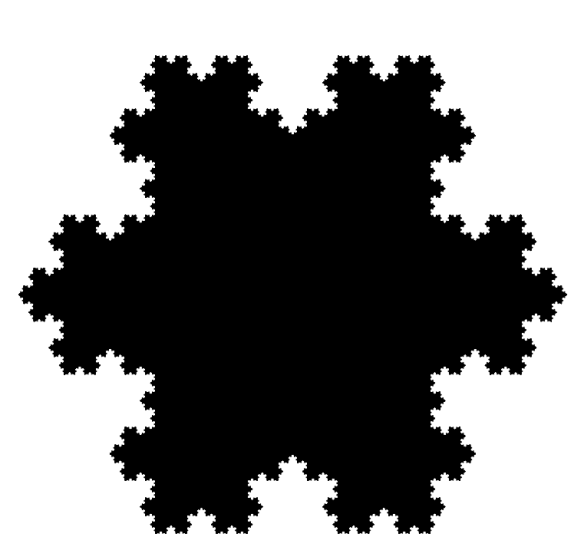

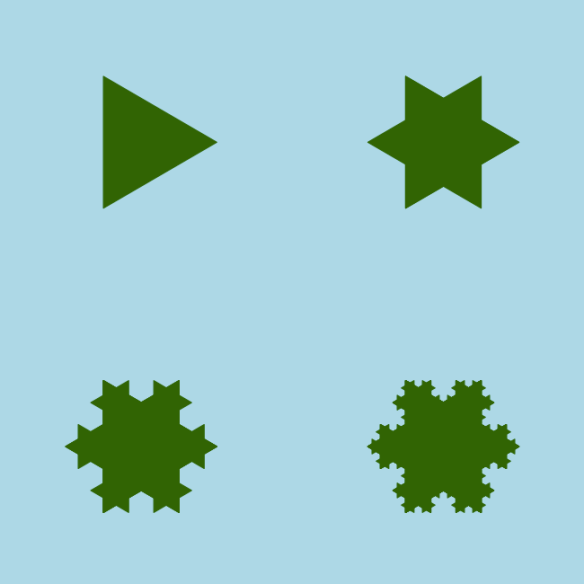

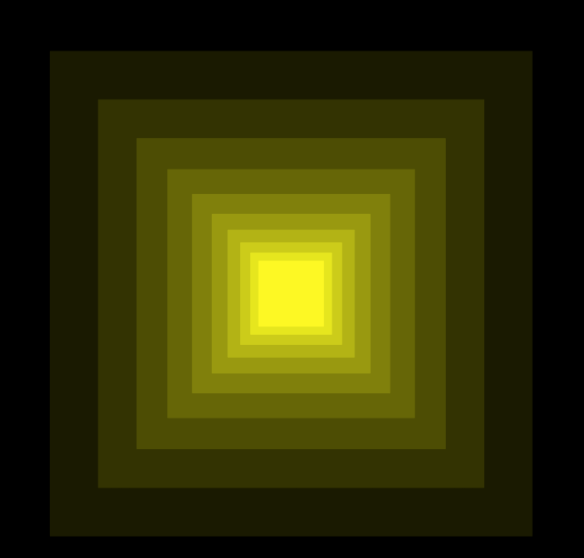

This post uses the graphics package ggplot2 (Wickham, 2009) to illustrate a new method of making an image of the Sierpiński Carpet, which was featured in a previous post (Fractals with R, Part 2: the Sierpiński Carpet). The method used to make the matrix representing the fractal is the same; again, the algorithm is essential the same as the described in described in Weisstein (2012), but implemented in R. In this post, I have used the graphics package ggplot2 to display the image. The ggplot2 layer “geom_tile” produces output much like the function “image” in the base graphics package. View ports made with the “grid” package are used to make the four panels of the plot shown in figure 1; one of the advantages of ggplot2 is that it makes it relatively easy to work with the grid package in this way. Because the function “ggplot” takes a data frame as an argument, some extra work was required to turn the matrix representing an iteration of the fractal into a data frame with X and Y columns for the positions of cells, and an indicator column I to represent the colour of the cell. Running the script provided below requires that you have the “ggplot2” package installed; if you have an internet connection, this can be done by entering install.packages(“ggplot2”) on the R command line. (The grid library should come already installed with the basic installation of R.)

Reference

H. Wickham. ggplot2: elegant graphics for data analysis. Springer New York, 2009.

Weisstein, Eric W. “Sierpiński Carpet.” From MathWorld– A Wolfram Wed Resource Accessed Oct. 22, 2012: http://mathworld.wolfram.com/SierpinskiCarpet.html

R Script

#Written by Allan Roberts, Feb 2014

library(ggplot2)

library(grid)

SierpinskiCarpet <- function(k){

Iterate <- function(M){

A <- cbind(M,M,M);

B <- cbind(M,0*M,M);

return(rbind(A,B,A))

}

M <- as.matrix(1)

for (i in 1:k) M <- Iterate(M);

n <- dim(M)[1]

X <- numeric(n)

Y <- numeric(n)

I <- numeric(n)

for (i in 1:n) for (j in 1:n){

X[i + (j-1)*n] <- i;

Y[i + (j-1)*n] <- j;

I[i + (j-1)*n] <- M[i,j];

}

DATA <- data.frame(X,Y,I)

p <- ggplot(DATA,aes(x=X,y=Y,fill=I))

p <- p + geom_tile() + theme_bw() + scale_fill_gradient(high=rgb(0,0,0),low=rgb(1,1,1))

p <-p+ theme(legend.position=0) + theme(panel.grid = element_blank())

p <- p+ theme(axis.text = element_blank()) + theme(axis.ticks = element_blank())

p <- p+ theme(axis.title = element_blank()) + theme(panel.border = element_blank());

return(p)

}

A <- viewport(0.25,0.75,0.45,0.45)

B <- viewport(0.75,0.75,0.45,0.45)

C <- viewport(0.25,0.25,0.45,0.45)

D <- viewport(0.75,0.25,0.45,0.45)

quartz(height=6,width=6)

print(SierpinskiCarpet(1),vp=A)

print(SierpinskiCarpet(2),vp=B)

print(SierpinskiCarpet(3),vp=C)

print(SierpinskiCarpet(4),vp=D)